Gaussian Process Motion Planner (GPMP)

Gaussian Process

ref:

- blog

- project

- paper

- book

Multivariate Normal Distribution (MVN) Mixtures of Gaussians (MoG)

Gaussian Process Regression

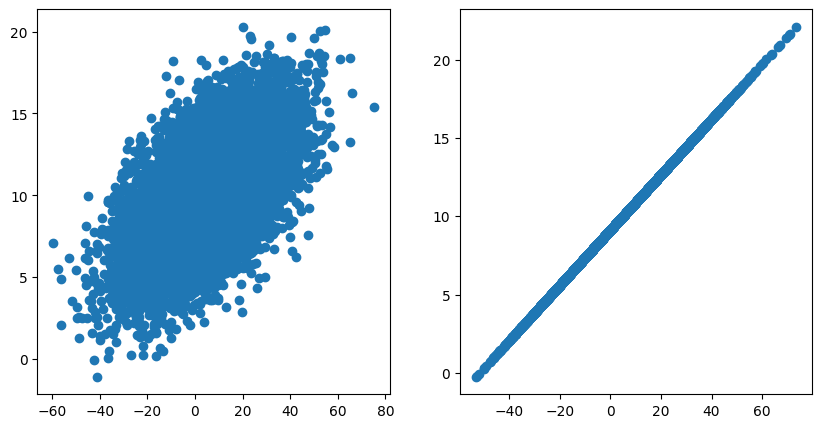

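Suppose we want to predict the relationship between the data $\{x_i, y_i\}$. If $x_i$ and $y_i$ are each normally distributed, the plot relating them can be viewed as their joint distribution. If that joint distribution is a multivariate Gaussian, it is governed by the covariance matrix of that Gaussian. The covariance matrix describes how the variables constrain one another: on a discrete set of data points it is a positive semidefinite matrix, and on a continuous domain it becomes a covariance function. Different data relationships therefore correspond to different covariance functions. We can thus define the covariance through a kernel function and fit the data by determining the form of that kernel step by step.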

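Multivariate Gaussian:

$$\mathcal{N}(\mathbf{x} \mid \boldsymbol{\mu}, \Sigma) = \frac{1}{(2\pi)^{d/2} \lvert\Sigma\rvert^{1/2}} \exp\left(-\frac{1}{2}(\mathbf{x} - \boldsymbol{\mu})^\top \Sigma^{-1} (\mathbf{x} - \boldsymbol{\mu})\right)$$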

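The off-diagonal entries of the covariance matrix (its upper and lower triangles) encode the correlation between variables: the larger their magnitude, the stronger the linear correlation.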

mu = [5, 10]

Sigma1 = array([[289., 30.], [30., 9.]])

Sigma2 = array([[289., 51.], [51., 9.]])
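To make the link between off-diagonal entries and correlation concrete, here is a minimal sketch that reuses `mu`, `Sigma1` and `Sigma2` from above. The helper `correlation`, the sample size and the random seed are illustrative choices, not part of the original notes.

```python
import numpy as np

mu = np.array([5.0, 10.0])
Sigma1 = np.array([[289.0, 30.0], [30.0, 9.0]])
Sigma2 = np.array([[289.0, 51.0], [51.0, 9.0]])

def correlation(Sigma):
    """Pearson correlation implied by a 2x2 covariance matrix."""
    return Sigma[0, 1] / np.sqrt(Sigma[0, 0] * Sigma[1, 1])

rho1 = correlation(Sigma1)  # moderate linear correlation
rho2 = correlation(Sigma2)  # larger off-diagonal entry, stronger correlation

# Draw samples under Sigma1 and compare the empirical correlation (seed is arbitrary).
rng = np.random.default_rng(0)
samples = rng.multivariate_normal(mu, Sigma1, size=5000)
empirical_rho1 = np.corrcoef(samples.T)[0, 1]
```

With these numbers the standard deviations are 17 and 3, so an off-diagonal value of 51 is the largest one that still gives a valid covariance matrix: `Sigma2` describes perfectly correlated variables and is singular.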

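Kernel function:

$$k(x, x') = \langle f(x), f(x') \rangle$$

Computing inner products in a high-dimensional feature space is expensive, so the kernel trick replaces the inner product of high-order feature maps with a high-order function of the inner product in the original space.

In the extreme case where $f(\cdot)$ is infinite-dimensional, the inner product $\langle f(x), f(x') \rangle$ can only be evaluated through the kernel.

The $f(x)$ in the inner product can be seen as mapping the data into a feature space; the inner product then measures how close those features are.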
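As a small illustration of trading an inner product of feature maps for a function of the inner product, the sketch below uses a degree-2 polynomial kernel on 2-D inputs. The feature map `phi` and the kernel `poly_kernel` are examples chosen for this sketch, not kernels used elsewhere in these notes.

```python
import numpy as np

def phi(x):
    """Explicit degree-2 feature map for a 2-D input (illustrative choice)."""
    x1, x2 = x
    return np.array([x1 * x1, np.sqrt(2.0) * x1 * x2, x2 * x2])

def poly_kernel(x, y):
    """Same quantity computed in input space: (x . y)^2."""
    return float(np.dot(x, y)) ** 2

x = np.array([1.0, 2.0])
y = np.array([3.0, -1.0])

explicit = float(np.dot(phi(x), phi(y)))  # inner product in feature space
implicit = poly_kernel(x, y)              # kernel evaluated in input space
```

Both routes give the same number, but the kernel never builds the feature vectors, which is what makes very high-dimensional (or infinite-dimensional) feature spaces usable.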

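The RBF (radial basis function) kernel:

$$k(x, x') = \exp\left(-\frac{\lVert x - x' \rVert^2}{2 l^2}\right)$$

Radial basis functions (RBF) are a commonly used class of kernels. Their value depends only on the Euclidean (2-norm) distance between the feature vectors, decreases as that distance grows, and lies between 0 and 1, which makes the RBF kernel an effective similarity measure.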

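Periodic kernel (models periodic functions):

$$k(x, x') = \sigma^2 \exp\left(-\frac{2}{l^2} \sin^2\left(\pi \frac{\lvert x - x' \rvert}{p}\right)\right)$$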

with:

- $\sigma^2$ the overall variance, or amplitude

- $l$ the lengthscale

- $p$ the period

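Local periodic kernel:

Combining kernels by multiplication: the local periodic kernel is the product of the periodic kernel and the exponentiated quadratic (RBF) kernel:

$$k(x, x') = \sigma^2 \exp\left(-\frac{2}{l_p^2} \sin^2\left(\pi \frac{\lvert x - x' \rvert}{p}\right)\right) \exp\left(-\frac{\lVert x - x' \rVert^2}{2 l_{eq}^2}\right)$$

where $l_p$ is the lengthscale of the periodic factor and $l_{eq}$ the lengthscale of the exponentiated quadratic factor.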

Kernels can be combined by adding them together.
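The kernels above, and their combination by product and sum, can be sketched for 1-D inputs as follows. Function names, default parameter values and the test grid are assumptions made for illustration.

```python
import numpy as np

def rbf_kernel(xa, xb, lengthscale=1.0):
    """Exponentiated quadratic (RBF) kernel on 1-D inputs."""
    d = xa[:, None] - xb[None, :]
    return np.exp(-0.5 * d**2 / lengthscale**2)

def periodic_kernel(xa, xb, variance=1.0, lengthscale=1.0, period=1.0):
    """Periodic kernel with amplitude sigma^2, lengthscale l and period p."""
    d = np.abs(xa[:, None] - xb[None, :])
    return variance * np.exp(-2.0 * np.sin(np.pi * d / period) ** 2 / lengthscale**2)

def local_periodic_kernel(xa, xb, variance=1.0, l_p=1.0, period=1.0, l_eq=1.0):
    """Product of the periodic kernel and the RBF kernel."""
    return periodic_kernel(xa, xb, variance, l_p, period) * rbf_kernel(xa, xb, l_eq)

x = np.linspace(0.0, 4.0, 41)
K_rbf = rbf_kernel(x, x)
K_per = periodic_kernel(x, x, period=2.0)
K_loc = local_periodic_kernel(x, x, period=2.0, l_eq=1.5)
K_sum = K_rbf + K_per  # combining kernels by addition
```

Sums and products of valid kernels are again valid kernels, so `K_loc` and `K_sum` remain symmetric positive semidefinite (up to rounding error).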

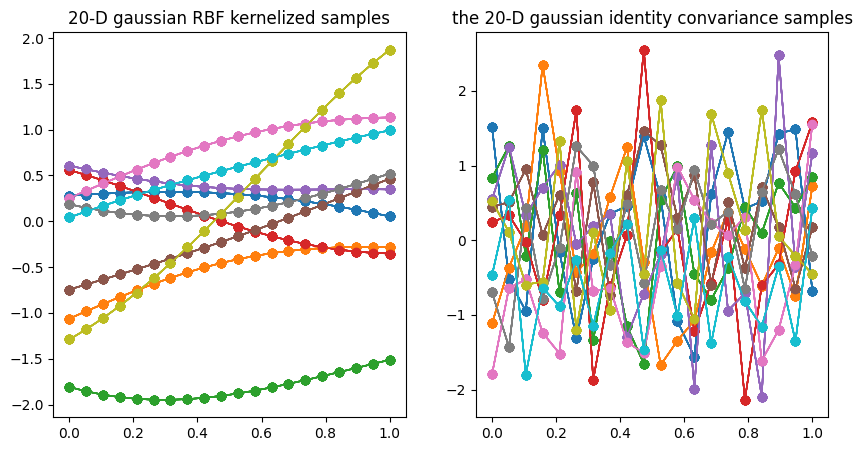

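The figure below compares samples drawn from multivariate Gaussians. Each line is one sample, and a continuous-looking curve already reflects some constraint between the variables. The right panel uses the identity matrix as the covariance: the variables are mutually independent, so the sampled points jump up and down at random. The left panel uses an RBF kernel as the covariance matrix: the line segments joining the sampled points are much smoother, which shows that the variables now constrain one another and fluctuate in a regular way under that probabilistic coupling.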

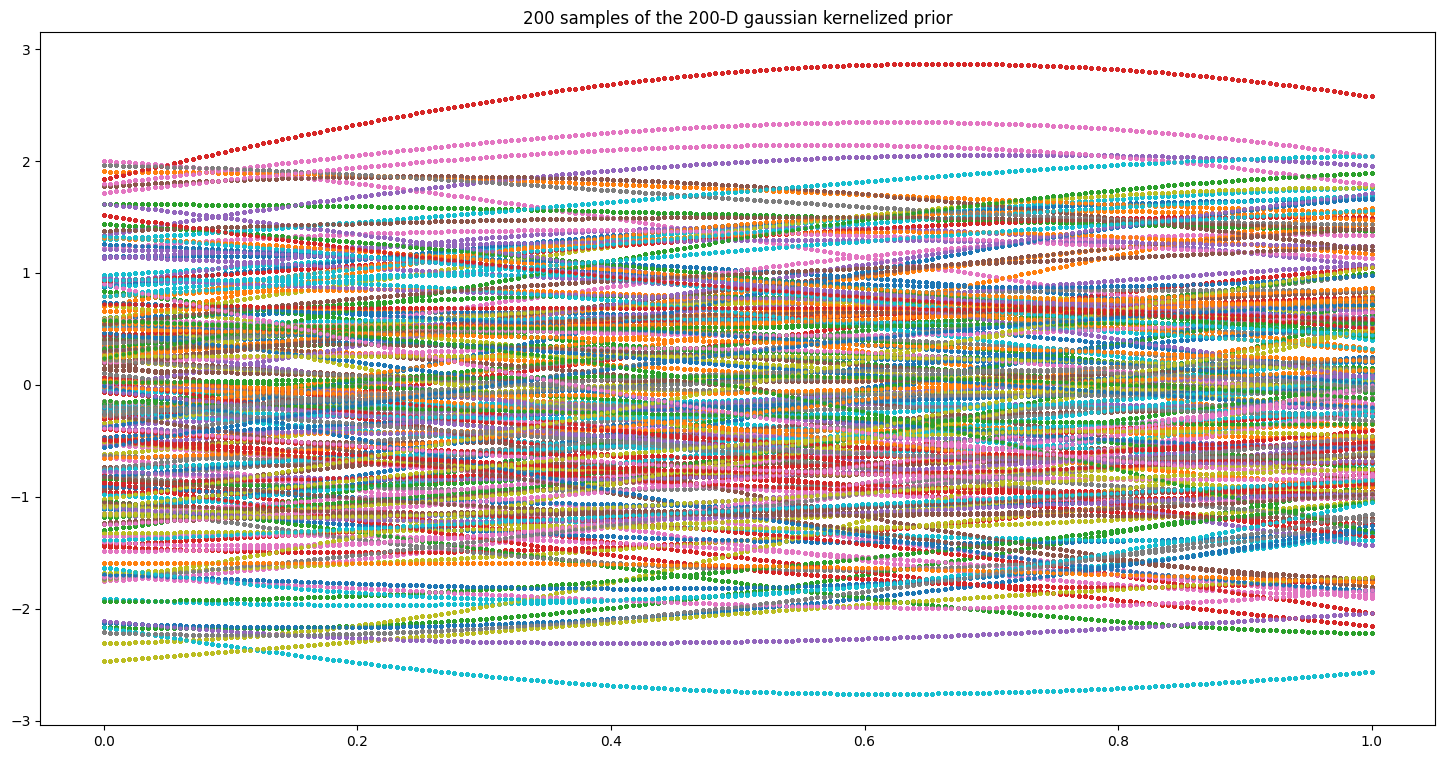

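As we increase the dimension of the multivariate Gaussian, the sampled points trace out a smooth line that resembles the graph of a function. Put simply, as the dimension grows, the sampled points need less and less help from connecting line segments; they form a smooth curve on their own.

As the dimension tends to infinity, every point in the space can be regarded as a possible input. Here "input" means any point or state that can be sampled from this infinite-dimensional Gaussian. In other words, in the infinite-dimensional case the Gaussian distribution describes a function with infinitely many parameters.

The figure below shows 200 curves sampled from a 200-dimensional Gaussian.

Such an infinite-dimensional distribution lets us use the observed data to decide where the probability mass should concentrate, so that only the parameter dimensions of interest are kept. This is the Gaussian process.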
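The sampling experiment described above can be reproduced with a short sketch. The input grid, the lengthscale, the jitter term and the `roughness` measure are assumptions made for illustration; sampling uses a Cholesky factor of the covariance.

```python
import numpy as np

def rbf_kernel(xa, xb, lengthscale=1.0):
    """Exponentiated quadratic (RBF) kernel on 1-D inputs."""
    d = xa[:, None] - xb[None, :]
    return np.exp(-0.5 * d**2 / lengthscale**2)

def sample_mvn(cov, n_samples, rng):
    """Draw zero-mean samples with covariance `cov` via its Cholesky factor."""
    L = np.linalg.cholesky(cov)
    z = rng.standard_normal((cov.shape[0], n_samples))
    return (L @ z).T  # one sampled curve per row

rng = np.random.default_rng(0)
dim, n_curves = 200, 200
x = np.linspace(-5.0, 5.0, dim)

# Identity covariance: independent variables, jagged samples.
independent = sample_mvn(np.eye(dim), n_curves, rng)
# RBF covariance: neighbouring variables are coupled, smooth samples.
# The small jitter keeps the Cholesky factorization numerically stable.
smooth = sample_mvn(rbf_kernel(x, x) + 1e-6 * np.eye(dim), n_curves, rng)

def roughness(curves):
    """Mean absolute jump between neighbouring points of each curve."""
    return np.mean(np.abs(np.diff(curves, axis=1)))
```

Comparing `roughness(independent)` with `roughness(smooth)` quantifies what the figures show: with the RBF covariance, neighbouring points barely differ, so each sample already looks like a function.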

A Gaussian process is a probability distribution over possible functions that fit a set of points.

Gaussian Process

A stochastic process describes a system that changes randomly over time.

Gaussian processes are distributions over functions $f(x)$, defined by a mean function $m(x)$ and a covariance function $k(x, x')$ as:

$$f(x) \sim \mathcal{GP}(m(x), k(x, x'))$$

where for any finite subset $X = \{\mathbf{x}_1, \ldots, \mathbf{x}_n\}$ of the domain of $x$, the marginal distribution is a multivariate Gaussian distribution:

$$f(X) \sim \mathcal{N}(m(X), k(X, X))$$

The covariance function models the joint variability of the Gaussian process. By choosing a specific kernel function $k$, it is possible to encode prior information about this distribution.

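Multivariate Gaussian Theorem

Suppose $X = (x_1, x_2)$ is jointly Gaussian with parameters:

$$\mu = \begin{pmatrix} \mu_1 \\ \mu_2 \end{pmatrix}, \quad \Sigma = \begin{pmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{pmatrix}$$

Then:

- The marginal distribution of any subset of elements of a multivariate normal distribution is also normal:

  $$p(x_1) = \mathcal{N}(x_1 \mid \mu_1, \Sigma_{11}), \quad p(x_2) = \mathcal{N}(x_2 \mid \mu_2, \Sigma_{22})$$

- The conditional distribution of a subset of the elements, conditioned on the remaining elements, is normal too. The posterior conditional is given by:

  $$p(x_1 \mid x_2) = \mathcal{N}(x_1 \mid \mu_{1|2}, \Sigma_{1|2})$$

  $$\mu_{1|2} = \mu_1 + \Sigma_{12} \Sigma_{22}^{-1} (x_2 - \mu_2), \quad \Sigma_{1|2} = \Sigma_{11} - \Sigma_{12} \Sigma_{22}^{-1} \Sigma_{21}$$

Or, equivalently, in terms of the precision matrix $\Lambda = \Sigma^{-1}$, partitioned in the same way as $\Sigma$:

$$\mu_{1|2} = \mu_1 - \Lambda_{11}^{-1} \Lambda_{12} (x_2 - \mu_2), \quad \Sigma_{1|2} = \Lambda_{11}^{-1}$$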
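A small numerical check of the conditioning rule, using the 2-D example values `mu = [5, 10]` and `Sigma1` from earlier in these notes. The observed value `x2 = 13` and the helper name are arbitrary choices for illustration; the second half recomputes the same quantities from the precision matrix as a cross-check.

```python
import numpy as np

mu = np.array([5.0, 10.0])
Sigma = np.array([[289.0, 30.0], [30.0, 9.0]])

def condition_first_on_second(mu, Sigma, x2):
    """Mean and variance of x1 given x2 for a 2-D Gaussian (scalar blocks)."""
    s11, s12 = Sigma[0, 0], Sigma[0, 1]
    s21, s22 = Sigma[1, 0], Sigma[1, 1]
    cond_mean = mu[0] + s12 / s22 * (x2 - mu[1])
    cond_var = s11 - s12 * s21 / s22
    return cond_mean, cond_var

x2_observed = 13.0  # arbitrary observation
cond_mean, cond_var = condition_first_on_second(mu, Sigma, x2_observed)

# Cross-check with the precision matrix Lambda = Sigma^{-1}.
Lam = np.linalg.inv(Sigma)
prec_mean = mu[0] - Lam[0, 1] / Lam[0, 0] * (x2_observed - mu[1])
prec_var = 1.0 / Lam[0, 0]
```

Observing `x2` three units above its mean shifts the mean of `x1` upward and shrinks its variance below the marginal value of 289, which is the mechanism GP regression relies on.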

Noiseless GP regression

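Observe a training set $\mathcal{D} = \{(x_i, f_i),\ i = 1:N\}$, where $f_i = f(x_i)$.

Given a test set $\mathbf{X}_*$, predict the function outputs $\mathbf{f}_*$. By the definition of a GP, the joint distribution of training and test outputs is

$$\begin{pmatrix} \mathbf{f} \\ \mathbf{f}_* \end{pmatrix} \sim \mathcal{N}\left( \begin{pmatrix} m(X) \\ m(\mathbf{X}_*) \end{pmatrix}, \begin{pmatrix} K & K_* \\ K_*^\top & K_{**} \end{pmatrix} \right)$$

where $K = k(X, X)$, $\quad K_* = k(X, \mathbf{X}_*)$, $\quad K_{**} = k(\mathbf{X}_*, \mathbf{X}_*)$.

Define the kernel, for example the RBF kernel:

$$k(x, x') = \exp\left(-\frac{\lVert x - x' \rVert^2}{2 l^2}\right)$$

Predictions (from the conditional of a multivariate Gaussian):

$$p(\mathbf{f}_* \mid \mathbf{X}_*, X, \mathbf{f}) = \mathcal{N}(\mathbf{f}_* \mid \boldsymbol{\mu}_*, \Sigma_*)$$

$$\boldsymbol{\mu}_* = m(\mathbf{X}_*) + K_*^\top K^{-1} (\mathbf{f} - m(X)), \quad \Sigma_* = K_{**} - K_*^\top K^{-1} K_*$$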
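The prediction step can be sketched in a few lines of NumPy. This assumes a zero mean function, an RBF kernel with unit lengthscale, and a tiny jitter on the diagonal for numerical stability; the training function `sin` and all names are illustrative.

```python
import numpy as np

def rbf_kernel(xa, xb, lengthscale=1.0):
    """Exponentiated quadratic (RBF) kernel on 1-D inputs."""
    d = xa[:, None] - xb[None, :]
    return np.exp(-0.5 * d**2 / lengthscale**2)

def gp_posterior_noiseless(X_train, f_train, X_test, lengthscale=1.0, jitter=1e-10):
    """Posterior mean and covariance of f_* given noise-free observations (zero prior mean)."""
    K = rbf_kernel(X_train, X_train, lengthscale) + jitter * np.eye(len(X_train))
    K_s = rbf_kernel(X_train, X_test, lengthscale)    # K_*
    K_ss = rbf_kernel(X_test, X_test, lengthscale)    # K_**
    solved = np.linalg.solve(K, K_s)                  # K^{-1} K_*
    mean = solved.T @ f_train                         # K_*^T K^{-1} f
    cov = K_ss - K_s.T @ solved                       # K_** - K_*^T K^{-1} K_*
    return mean, cov

X_train = np.array([-4.0, -2.0, 0.0, 1.5, 3.0])
f_train = np.sin(X_train)
X_test = np.linspace(-5.0, 5.0, 50)

mean, cov = gp_posterior_noiseless(X_train, f_train, X_test)
# Predicting at the training inputs should reproduce the observations.
mean_at_train, cov_at_train = gp_posterior_noiseless(X_train, f_train, X_train)
```

Because the observations are noise-free, the posterior interpolates the training data: at the training inputs the mean equals `f_train` and the variance collapses to (numerically) zero.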

Noisy GP regression

Numerical Computation for Noisy GP regression

- input: $X$ (inputs), $\mathbf{y}$ (targets), $k$ (covariance function), $\sigma_n^2$ (noise level), $\mathbf{x}_*$ (test input)

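- steps (with $K = k(X, X)$ and $\mathbf{k}_* = k(X, \mathbf{x}_*)$):

  1. $L := \operatorname{cholesky}(K + \sigma_n^2 I)$
  2. $\boldsymbol{\alpha} := L^\top \backslash (L \backslash \mathbf{y})$
  3. $\bar{f}_* := \mathbf{k}_*^\top \boldsymbol{\alpha}$
  4. $\mathbf{v} := L \backslash \mathbf{k}_*$
  5. $\mathbb{V}[f_*] := k(\mathbf{x}_*, \mathbf{x}_*) - \mathbf{v}^\top \mathbf{v}$
  6. $\log p(\mathbf{y} \mid X) := -\frac{1}{2} \mathbf{y}^\top \boldsymbol{\alpha} - \sum_i \log L_{ii} - \frac{n}{2} \log 2\pi$

- return: $\bar{f}_*$ (mean), $\mathbb{V}[f_*]$ (variance), $\log p(\mathbf{y} \mid X)$ (log marginal likelihood)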
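A minimal NumPy sketch of a Cholesky-based computation with the inputs and outputs listed above. It assumes a zero mean function and an RBF kernel, handles several test inputs at once, and uses `np.linalg.solve` for the triangular systems for brevity (a dedicated triangular solver would be cheaper). The toy data and all names are illustrative.

```python
import numpy as np

def rbf_kernel(xa, xb, lengthscale=1.0):
    """Exponentiated quadratic (RBF) kernel on 1-D inputs."""
    d = xa[:, None] - xb[None, :]
    return np.exp(-0.5 * d**2 / lengthscale**2)

def gp_predict_cholesky(X, y, kernel, noise_var, x_star):
    """Predictive mean, variance and log marginal likelihood for noisy targets."""
    n = len(X)
    K = kernel(X, X)
    k_star = kernel(X, x_star)                            # shape (n, m)
    L = np.linalg.cholesky(K + noise_var * np.eye(n))     # lower-triangular factor
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))   # (K + sigma_n^2 I)^{-1} y
    mean = k_star.T @ alpha
    v = np.linalg.solve(L, k_star)
    var = np.diag(kernel(x_star, x_star)) - np.sum(v**2, axis=0)
    log_ml = (-0.5 * y @ alpha
              - np.sum(np.log(np.diag(L)))
              - 0.5 * n * np.log(2.0 * np.pi))
    return mean, var, log_ml

rng = np.random.default_rng(1)
X = np.linspace(-3.0, 3.0, 12)
y = np.sin(X) + 0.1 * rng.standard_normal(X.shape)   # noisy toy targets
x_star = np.array([-1.0, 0.5, 2.0])

mean, var, log_ml = gp_predict_cholesky(X, y, rbf_kernel, 0.01, x_star)
```

Working with the Cholesky factor avoids forming an explicit inverse and gives the log determinant for free, since $\log\lvert K + \sigma_n^2 I\rvert = 2\sum_i \log L_{ii}$.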

Tuning hyperparameters


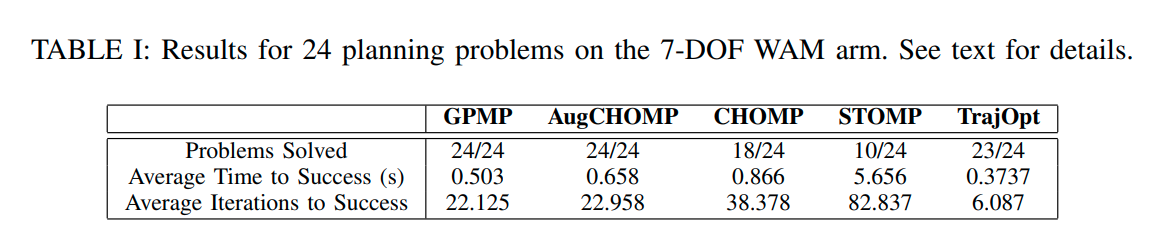

Gaussian Process Motion Planning

GPMP represents a continuous-time trajectory as a sample from a Gaussian process (GP) generated by a linear time-varying stochastic differential equation (LTV-SDE).
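To give a feel for a trajectory drawn from an SDE-generated GP, the sketch below samples a 1-D trajectory from a constant-velocity ("white noise on acceleration") prior, a simple time-invariant special case. The choice of this prior, the state layout `[position, velocity]`, the power spectral density `q_c` and all function names are assumptions for illustration, not details taken from these notes.

```python
import numpy as np

def transition(dt):
    """State transition for the state [position, velocity] over a step dt."""
    return np.array([[1.0, dt],
                     [0.0, 1.0]])

def process_noise(dt, q_c):
    """Covariance accumulated over dt when white noise drives the acceleration."""
    return q_c * np.array([[dt**3 / 3.0, dt**2 / 2.0],
                           [dt**2 / 2.0, dt]])

def sample_trajectory(x0, n_steps, dt, q_c, rng):
    """Sample the states of one trajectory at equally spaced times."""
    Phi = transition(dt)
    L = np.linalg.cholesky(process_noise(dt, q_c))
    states = [np.asarray(x0, dtype=float)]
    for _ in range(n_steps):
        noise = L @ rng.standard_normal(2)
        states.append(Phi @ states[-1] + noise)
    return np.stack(states)

rng = np.random.default_rng(0)
trajectory = sample_trajectory([0.0, 1.0], n_steps=50, dt=0.1, q_c=0.5, rng=rng)
```

Because each state depends only on the previous one, a prior of this kind has a sparse, Markov structure, which is the property that makes GP trajectory representations cheap to evaluate and interpolate.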

Experiment

(TrajOpt is the most efficient.)

Ref

- PAPER

- Gaussian Processes for Regression: A Quick Introduction

- 2016 ICRA - Gaussian process motion planning

- 2016 RSS - Motion Planning as Probabilistic Inference using Gaussian Processes and Factor Graphs

- 2018 Continuous time Gaussian process motion planning via probabilistic inference

- 2012 Optimal trajectories for time-critical street scenarios using discretized terminal manifolds

- PROJECT