02-classification

Classification problem. For now, we will focus on the binary classification problem, in which $y$ can take only two values, 0 and 1.

logistic regression

We change the form of our hypotheses $h_\theta(x)$:

$$h_\theta(x) = g(\theta^T x) = \frac{1}{1 + e^{-\theta^T x}}$$

where

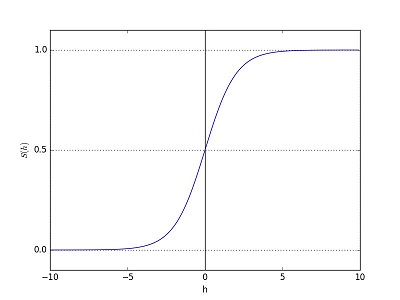

is called the sigmoid function or logistic function. Here is a plot showing $g(z)$:
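As a rough illustration of the sigmoid and the hypothesis built on it, here is a minimal sketch in plain Python. The function names `sigmoid` and `hypothesis` are chosen for this example only, and the input `x` is assumed to already include the intercept term $x_0 = 1$.

```python
import math

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + exp(-z)).

    The two branches are algebraically identical; splitting on the sign
    of z avoids calling math.exp on a large positive argument.
    """
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def hypothesis(theta, x):
    """h_theta(x) = g(theta^T x); x is assumed to include x_0 = 1."""
    return sigmoid(sum(t * xi for t, xi in zip(theta, x)))

# g(z) tends to 0 as z -> -inf, equals 0.5 at z = 0, and tends to 1 as z -> +inf.
for z in (-5.0, 0.0, 5.0):
    print(z, sigmoid(z))
```

The output of `sigmoid` always lies between 0 and 1, which is what allows $h_\theta(x)$ to be read as a probability.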

We predict $y=1$ when $h_\theta(x) \ge 0.5$, which is equivalent to $\theta^Tx \ge 0$ because $g(0) = 0.5$ and $g$ is increasing. Consider logistic regression with two features $x_1$ and $x_2$: the condition $\theta^Tx = 0$ becomes $\theta_0+\theta_1 x_1+\theta_2x_2=0$. So in the $x_1$-$x_2$ plane the two classes are separated by a line, which is called the decision boundary. However, if we add quadratic polynomial features (for example $x_1^2$ and $x_2^2$), the boundary is non-linear and may be a circle. With higher-order polynomial features, the shape can be more complex.
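The two kinds of decision boundary can be sketched as follows. The parameter vectors `theta_line` and `theta_circle` are hand-picked values for illustration, not fitted ones, and the feature maps are assumptions of this example.

```python
def predict(theta, features):
    """Predict y = 1 exactly when theta^T x >= 0, i.e. when h_theta(x) >= 0.5."""
    score = sum(t * f for t, f in zip(theta, features))
    return 1 if score >= 0 else 0

def linear_features(x1, x2):
    # [x_0, x_1, x_2] with the intercept term x_0 = 1
    return [1.0, x1, x2]

def quadratic_features(x1, x2):
    # adds the squared terms x_1^2 and x_2^2
    return [1.0, x1, x2, x1 * x1, x2 * x2]

# Hypothetical parameters chosen by hand:
theta_line = [-3.0, 1.0, 1.0]              # boundary: x1 + x2 = 3 (a line)
theta_circle = [-1.0, 0.0, 0.0, 1.0, 1.0]  # boundary: x1^2 + x2^2 = 1 (a circle)

print(predict(theta_line, linear_features(2.0, 2.0)))       # above the line
print(predict(theta_circle, quadratic_features(0.0, 0.0)))  # inside the circle
```

The prediction rule is the same in both cases; only the feature map changes the shape of the boundary.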

The choice of the logistic function is a fairly natural one (why?). One useful property of the derivative of the sigmoid function:

$$g'(z) = g(z)\,(1 - g(z))$$
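The derivative identity $g'(z) = g(z)(1 - g(z))$ can be checked numerically. The sketch below compares it with a central finite difference; the step size `eps` and the sample points are arbitrary choices for this example.

```python
import math

def g(z):
    """Sigmoid function g(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def g_prime_identity(z):
    """Derivative computed from the identity g'(z) = g(z) * (1 - g(z))."""
    return g(z) * (1.0 - g(z))

def g_prime_numeric(z, eps=1e-6):
    """Central finite-difference approximation of g'(z)."""
    return (g(z + eps) - g(z - eps)) / (2.0 * eps)

for z in (-3.0, -1.0, 0.0, 0.5, 2.0):
    print(z, g_prime_identity(z), g_prime_numeric(z))
```

The two columns should agree to many decimal places, and the derivative is largest at $z = 0$, where it equals 0.25.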

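Let us assume that:

$$P(y=1 \mid x;\theta) = h_\theta(x), \qquad P(y=0 \mid x;\theta) = 1 - h_\theta(x)$$

or, more compactly:

$$p(y \mid x;\theta) = \left(h_\theta(x)\right)^{y}\left(1 - h_\theta(x)\right)^{1-y}$$

Assuming the $m$ training examples are independent, maximum likelihood estimation maximizes the log-likelihood:

$$\ell(\theta) = \log L(\theta) = \sum_{i=1}^{m} y^{(i)}\log h_\theta(x^{(i)}) + \left(1 - y^{(i)}\right)\log\left(1 - h_\theta(x^{(i)})\right)$$

This therefore gives us the stochastic (that is, random) gradient ascent rule:

$$\theta_j := \theta_j + \alpha\left(y^{(i)} - h_\theta(x^{(i)})\right)x_j^{(i)}$$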

This has the same form as the LMS update rule, although here $h_\theta(x)$ is a non-linear function of $\theta^Tx$.
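A minimal sketch of stochastic gradient ascent for logistic regression, applying the update $\theta_j := \theta_j + \alpha\,(y^{(i)} - h_\theta(x^{(i)}))\,x_j^{(i)}$ one example at a time. The function name `sga_fit`, the learning rate, the number of epochs, and the toy data are all assumptions made for this illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sga_fit(X, y, alpha=0.1, epochs=200, seed=0):
    """Stochastic gradient ascent on the logistic log-likelihood.

    X is a list of feature lists, each assumed to start with x_0 = 1.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    theta = [0.0] * len(X[0])
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)     # visit the examples in random order
        for i in order:
            h = sigmoid(sum(t * xj for t, xj in zip(theta, X[i])))
            err = y[i] - h     # (y - h_theta(x)) for this single example
            theta = [t + alpha * err * xj for t, xj in zip(theta, X[i])]
    return theta

# Toy data (hypothetical): one feature plus intercept, label 1 when the feature is positive.
X = [[1.0, -1.5], [1.0, -1.0], [1.0, -0.5], [1.0, 0.5], [1.0, 1.0], [1.0, 1.5]]
y = [0, 0, 0, 1, 1, 1]
theta = sga_fit(X, y)
print(theta)
```

Note that this toy data is linearly separable, so the likelihood has no finite maximizer and the slope keeps growing slowly with more epochs; the sketch only shows the form of the update.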

In fact, both aim to maximize the likelihood. To find the maximum, we look for a point where the first-order derivative is zero, so the problem is transformed into finding a solution of $f(x)=0$. Think back to your Numerical Analysis course.

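Consider Newton's method for finding a root of $f(\theta)=0$:

$$\theta := \theta - \frac{f(\theta)}{f'(\theta)}$$

To maximize $\ell$, we take $f(\theta) = \ell'(\theta)$, which gives $\theta := \theta - \ell'(\theta)/\ell''(\theta)$. For vector-valued $\theta$ there is a more general form (also called the Newton-Raphson method):

$$\theta := \theta - H^{-1}\nabla_\theta \ell(\theta)$$

where $H$ is the Hessian matrix, whose entries are given by:

$$H_{ij} = \frac{\partial^2 \ell(\theta)}{\partial\theta_i\,\partial\theta_j}$$

Newton's method typically enjoys faster convergence than gradient ascent and needs far fewer iterations, but each iteration is more expensive because it requires computing and inverting the Hessian.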
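The Newton-Raphson update $\theta := \theta - H^{-1}\nabla_\theta \ell(\theta)$ can be sketched for the smallest interesting case: an intercept plus one feature, so that $H$ is a 2x2 matrix that can be inverted by hand. The function names, the iteration count, and the toy data are assumptions of this example. The data is deliberately not linearly separable, so that a finite maximizer exists.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def grad_and_hessian(theta, xs, ys):
    """Gradient and Hessian of the log-likelihood for theta = (theta_0, theta_1)."""
    g0 = g1 = 0.0
    h00 = h01 = h11 = 0.0
    for x, y in zip(xs, ys):
        h = sigmoid(theta[0] + theta[1] * x)
        g0 += (y - h)          # d l / d theta_0
        g1 += (y - h) * x      # d l / d theta_1
        w = h * (1.0 - h)      # comes from g'(z) = g(z) (1 - g(z))
        h00 -= w               # Hessian entries: -sum of w * x_i * x_j
        h01 -= w * x
        h11 -= w * x * x
    return (g0, g1), (h00, h01, h11)

def newton_fit(xs, ys, iterations=10):
    theta = [0.0, 0.0]
    for _ in range(iterations):
        (g0, g1), (h00, h01, h11) = grad_and_hessian(theta, xs, ys)
        det = h00 * h11 - h01 * h01
        # d = H^{-1} g, using the closed-form inverse of a symmetric 2x2 matrix
        d0 = (h11 * g0 - h01 * g1) / det
        d1 = (h00 * g1 - h01 * g0) / det
        theta = [theta[0] - d0, theta[1] - d1]
    return theta

# Toy data (hypothetical), not linearly separable: y = 1 at x = 0 but y = 0 at x = 1.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [0, 0, 1, 0, 1]
theta = newton_fit(xs, ys)
gradient, _ = grad_and_hessian(theta, xs, ys)
print(theta, gradient)
```

After a handful of iterations the gradient is essentially zero, which illustrates the fast convergence; for more than a few parameters one would solve the linear system $H d = \nabla_\theta \ell(\theta)$ numerically rather than invert $H$ in closed form.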

Fisher's LDA

Linear discriminant analysis (LDA)